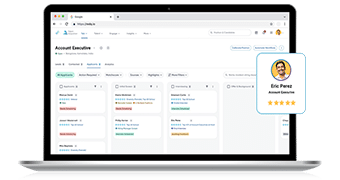

Explore Eightfold’s AI-powered Platform

Join us for this exclusive live demo showcasing our AI-powered Talent Intelligence Platform.

Register for a live demo →

A single AI platform for all talent

Powered by global talent data sets so you can realize the full potential of your workforce.

Explore talent suite →

The ultimate buyer’s guide for a talent intelligence platform

How do you get ahead when talent systems are already complex and are made even more complicated by today's uncertain labor market?

Download the guide →

Eightfold AI achieves FedRAMP Moderate Authorization

Eightfold AI’s Talent Intelligence Platform is now FedRAMP® Moderate Authorized, meeting the strict security standards required for U.S. federal agencies.

Read the announcement →

HR’s role in the AI revolution is bigger than you think

Leaders agree talent is key, but few involve HR in AI strategy. Discover how HR can lead the way in shaping AI-driven success in this new Harvard Business Review Analytic Services study.

Get the report →

What every leader needs to know about responsible AI

Responsible AI is more than a compliance checkbox. It’s a strategic imperative for building trust, driving innovation, and shaping the future of work.

Why responsible AI is your competitive advantage →

Responsible AI at Eightfold

We believe in helping everyone see their full potential — and the career opportunities that come with that view.

Explore our commitment to responsible AI →

Agentic AI marks the next era of workforce productivity

The next frontier for enterprise AI isn't just about producing content; it's about taking action. Agentic AI represents a new class of intelligent systems.

What agentic AI really means for business →